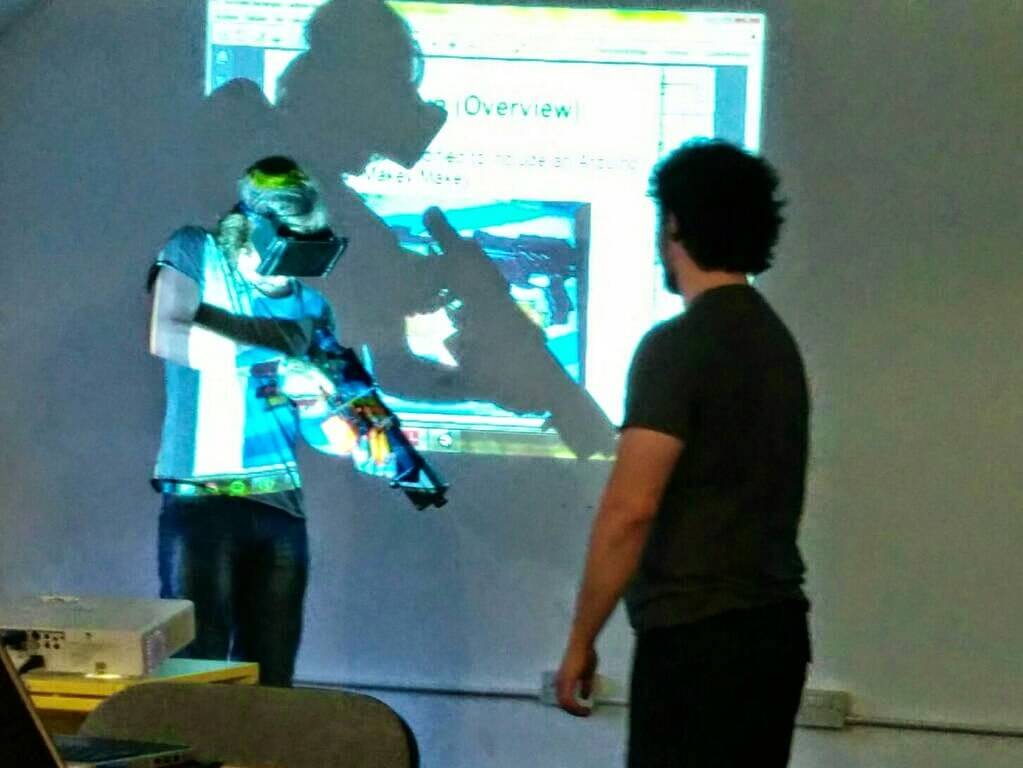

After leaving my VR Gun project aside for a while, I decided to go to HackManchester 2013 and give it the push it deserved by building not just the Gun but a full VR experience. In 25 hours I managed to finish the weapon and modify an existing game, Angry Bots, so it could be played with all the freedom of a wireless system!

I won the jury’s “Best Company Project” award and was also a finalist (2nd place) in the “Best of All” competition. It was a true honour, and it motivated me to polish the project and showcase it at Manchester’s Android Meetup a few months later, with huge success 🙂

OK, so what is this exactly? Basically, it is a set of applications that detect the user’s movements in order to control and display an FPS version of the game mentioned above. The user experiences a 3D environment that lets him not only look around with stereoscopic vision but also walk, jump, crouch, aim… a full VR experience. Most importantly, it all works in real time, without any cables, and is ultra-light, which is perfect for feeling deeply immersed.

All the code is on my GitHub. Here I will explain the key parts of the project, which are also summarised in the presentation I used for the Android Meetup; it can be found here.

The Gun:

The gun is, obviously, my VR Gun. I modified the original Makey Makey code on the Arduino board so that it tracks the on/off state of the trigger and the ammo clip, reporting each change only once, on the first call after it happens.
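Reporting a change “only on first-call” is essentially edge detection: the input is reported when it flips, not on every poll. A minimal sketch of that idea follows, written in Java to match the Android side rather than as the actual Arduino (C++) code; the class and enum names are hypothetical.

```java
/**
 * Hypothetical sketch: turns a stream of raw on/off readings (trigger or clip)
 * into one-off PRESSED/RELEASED events, emitted only when the state changes.
 */
public final class EdgeDetector {
    public enum Event { NONE, PRESSED, RELEASED }

    private boolean last; // previous reading; inputs start released

    /** Feed one raw reading; an event is returned only on the first call after a change. */
    public Event update(boolean pressed) {
        if (pressed == last) {
            return Event.NONE; // same state as before: nothing new to report
        }
        last = pressed;
        return pressed ? Event.PRESSED : Event.RELEASED;
    }
}
```

With this approach the phone receives one message per pull or release instead of a stream of repeated states.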

Through an OTG cable, the board is connected to a Galaxy S3 that is attached to the Gun itself and runs a very particular app. This app, in the DataStreamer folder, listens to the Arduino output and also tracks the gun’s pose. The phone therefore has all the important information about the weapon and can send it to the server (the game). But that’s not all! Because the phone is in contact with the gun and knows when the trigger is pressed, I also implemented some haptic feedback: when the user fires, the gun vibrates with a nice machine-gun rhythm.
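A machine-gun rhythm can be expressed as a looping vibration pattern. The sketch below, with a hypothetical class name and parameters, only computes the pattern in the format Android’s waveform vibrations use (alternating “off” and “on” durations in milliseconds, starting with “off”); it is not the project’s own code.

```java
/**
 * Hypothetical sketch: builds a looping vibration pattern in the format used by
 * Android waveform vibrations, where entries alternate between "off" and "on"
 * durations in milliseconds, starting with "off".
 */
public final class MachineGunHaptics {
    private MachineGunHaptics() {}

    /**
     * @param roundsPerMinute simulated fire rate, e.g. 600
     * @param dutyCycle       fraction of each shot spent vibrating, in (0, 1)
     * @return {offMs, onMs}, meant to be repeated from index 0 while the trigger is held
     */
    public static long[] pattern(int roundsPerMinute, double dutyCycle) {
        if (roundsPerMinute <= 0 || dutyCycle <= 0 || dutyCycle >= 1) {
            throw new IllegalArgumentException("need rpm > 0 and 0 < dutyCycle < 1");
        }
        long periodMs = Math.round(60_000.0 / roundsPerMinute); // time between shots
        long onMs = Math.max(1, Math.round(periodMs * dutyCycle));
        long offMs = Math.max(0, periodMs - onMs);
        return new long[] {offMs, onMs};
    }
}
```

On the phone, such a pattern could be started with `vibrator.vibrate(VibrationEffect.createWaveform(pattern, 0))` when the trigger is pressed (API 26+; older versions accept `vibrate(pattern, 0)`) and stopped with `vibrator.cancel()` on release. The repeat index `0` loops the whole pattern.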

Choosing the right phone is not as easy as it seems:

- It needs not only OTG support but also the ability to supply 5V through it.

- The pose detection relies heavily on the gyroscope, and nowadays it is quite difficult to find information about how good a phone’s gyroscope is. I tried my best to correct any drift using a version of this code, which brings the accelerometer and compass into the mix to create a rock-solid pose reading, but it can still be problematic on mid/low-end phones. For that reason I included a huge Sync button in the middle of the screen, impossible to miss while playing, that realigns the gun, hip and head poses.
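The linked fusion code is not reproduced here, but the general idea of cancelling gyroscope drift with an absolute reference can be sketched with a simpler technique: a single-axis complementary filter on yaw. The integrated gyro rate follows fast movements, while the compass heading slowly pulls the estimate back; `sync()` mimics the Sync button by making the current heading the new “forward”. The class, its parameters and the one-axis simplification are assumptions for illustration; a real implementation would fuse full 3D orientations.

```java
/**
 * Hypothetical single-axis complementary filter: gyro for fast changes,
 * compass as a slow absolute reference that cancels drift.
 */
public final class YawFilter {
    private final double alpha; // trust in the integrated gyro, close to 1 (e.g. 0.98)
    private double yaw;         // fused heading in radians, in [-pi, pi]
    private double syncOffset;  // heading treated as "forward" since the last sync()

    public YawFilter(double alpha, double initialYaw) {
        if (alpha < 0 || alpha > 1) {
            throw new IllegalArgumentException("alpha must be in [0, 1]");
        }
        this.alpha = alpha;
        this.yaw = wrap(initialYaw);
    }

    /**
     * @param gyroRate   angular speed around the vertical axis, rad/s
     * @param dt         time since the previous update, s
     * @param compassYaw absolute heading from the compass, rad
     * @return the fused heading relative to the last sync, rad
     */
    public double update(double gyroRate, double dt, double compassYaw) {
        double predicted = yaw + gyroRate * dt;      // fast, but drifts over time
        double error = wrap(compassYaw - predicted); // shortest way round the circle
        yaw = wrap(predicted + (1 - alpha) * error); // nudge towards the compass
        return wrap(yaw - syncOffset);
    }

    /** What a Sync button would do: the current heading becomes the new "forward". */
    public void sync() {
        syncOffset = yaw;
    }

    /** Normalises an angle to the range [-pi, pi]. */
    public static double wrap(double angle) {
        double twoPi = 2 * Math.PI;
        double a = (angle + Math.PI) % twoPi;
        if (a < 0) {
            a += twoPi;
        }
        return a - Math.PI;
    }
}
```

A larger `alpha` gives smoother, more responsive tracking but corrects drift more slowly; a smaller one trusts the noisier compass more.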

The Galaxy S3 works wonderfully, but there are still some scenarios where the user has to hit the button every 5 minutes or so, at least until I code a proper solution (I have already found one but have not implemented it yet; more in the last section). Also, supplying 5V over OTG drains the battery quite quickly (1-2 h).

The Movement:

For the movement I used a second phone running a pedometer that I originally created for my old Augmented Reality system; it is also bundled in the DataStreamer app. The important thing about this pedometer is that it listens not only to the strength of the steps but also to their rhythm, so it is very resistant to noise; it could even run directly on the helmet! Instead, I decided the user would put it in his back pocket. This way it can track the hip orientation and even the butt inclination, so the user walks in the direction his hips face rather than his head, and can crouch or even lie down on the ground in real time.
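The “strength plus rhythm” idea can be illustrated with a minimal sketch under stated assumptions (it is not the project’s pedometer): the input is the acceleration magnitude with gravity removed, an impact is a rising crossing of a strength threshold, and an impact only counts as a step if its timing matches the current walking rhythm. All names and thresholds are hypothetical.

```java
/**
 * Hypothetical sketch of a step detector that checks both strength and rhythm.
 * Input: acceleration magnitude with gravity removed (m/s^2) and a timestamp (ms).
 */
public final class RhythmPedometer {
    private final double threshold;   // minimum strength for an impact
    private final long minIntervalMs; // impacts closer than this are treated as noise
    private final long maxIntervalMs; // a longer gap means the user stopped walking
    private final double tolerance;   // allowed relative deviation from the rhythm

    private boolean above;            // was the previous sample above the threshold?
    private long lastImpactMs = -1;   // time of the last impact that fit the rhythm
    private double rhythmMs = -1;     // smoothed step interval, -1 while unknown
    private int steps;

    public RhythmPedometer(double threshold, long minIntervalMs, long maxIntervalMs, double tolerance) {
        this.threshold = threshold;
        this.minIntervalMs = minIntervalMs;
        this.maxIntervalMs = maxIntervalMs;
        this.tolerance = tolerance;
    }

    /** Feeds one sample; returns true if it completed a step that fits the rhythm. */
    public boolean onSample(double magnitude, long timeMs) {
        boolean rising = magnitude > threshold && !above; // strength check
        above = magnitude > threshold;
        if (!rising) {
            return false;
        }
        if (lastImpactMs < 0) {              // very first impact: just remember it
            lastImpactMs = timeMs;
            return false;
        }
        long interval = timeMs - lastImpactMs;
        if (interval > maxIntervalMs) {      // long pause: restart the rhythm from here
            lastImpactMs = timeMs;
            rhythmMs = -1;
            return false;
        }
        if (interval < minIntervalMs) {
            return false;                    // too soon after the last impact: noise
        }
        if (rhythmMs < 0) {                  // second impact of a walk sets the rhythm
            rhythmMs = interval;
            lastImpactMs = timeMs;
            return false;
        }
        if (Math.abs(interval - rhythmMs) > tolerance * rhythmMs) {
            return false;                    // off-beat impact: probably a bump, not a step
        }
        rhythmMs = 0.8 * rhythmMs + 0.2 * interval; // follow gradual changes of pace
        lastImpactMs = timeMs;
        steps++;
        return true;
    }

    public int steps() {
        return steps;
    }
}
```

In this sketch the first two impacts of a walk only establish the rhythm; a fuller version might count them retroactively once a third impact confirms it. Off-beat impacts are ignored without resetting the timing, so a single bump does not break the rhythm.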

Because, as mentioned in the previous section, not all phones have a gyroscope good enough for real time (RT), I added a toggle button that disables the hip/butt pose detection and uses the head tracking instead, in case the phone is not powerful enough to keep the pose updated without breaking the immersion.

The Headset:

This is the most important part. During the hackathon I made a helmet out of foam (later replaced by a more professional-looking black helmet) that holds a 7″ tablet (a Nexus 7) and two lenses in front of the user’s face. The code running here is in the VRUnity folder and contains a Unity3D project: the Angry Bots demo game, modified in two ways:

- The player has been removed and, after importing the Oculus Rift (OR) SDK, the camera has been replaced with a stereoscopic pair of cameras that renders the view with the oval shape the lenses need and tracks the head pose very quickly. Since the OR SDK is PC-only, I had to modify its code a bit so it wouldn’t silently crash on my device; specifically, I commented out all the code related to DeviceControllerManager and DeviceImposter.

- I included a communication system that allows the DataStreamer app to send data to the game. More on this in the Communication section.
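The “oval shape” comes from pre-distorting each eye’s image so that the lenses’ own distortion cancels it out. The OR SDK handles this itself; the sketch below only illustrates the common radial polynomial behind the technique: for every screen point, measured from the lens centre, the renderer samples the undistorted image at a radially scaled position. The class name and the coefficients used in the example are illustrative assumptions, not values taken from the SDK.

```java
/**
 * Illustrative sketch of radial (barrel) pre-distortion for one eye.
 * Coordinates are relative to the lens centre, in a normalised unit chosen by the caller.
 */
public final class BarrelDistortion {
    private final double k0, k1, k2; // polynomial coefficients (assumed values)

    public BarrelDistortion(double k0, double k1, double k2) {
        this.k0 = k0;
        this.k1 = k1;
        this.k2 = k2;
    }

    /** Returns the point of the rendered image to sample for screen point (x, y). */
    public double[] sourcePoint(double x, double y) {
        double r2 = x * x + y * y;                  // squared distance from the lens centre
        double scale = k0 + k1 * r2 + k2 * r2 * r2; // grows with distance: barrel effect
        return new double[] {x * scale, y * scale};
    }
}
```

Because points near the edge are sampled from further out, the picture is squeezed towards the borders of each eye’s view, and the lens’s opposite (pincushion) distortion stretches it back into a natural-looking image.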

The biggest challenges here were finding what was not working with the OR SDK (it wasn’t crashing; it was actually working, but my communication system wasn’t, and the SDK was to blame) and building the helmet. There are a few small details to take into account:

- It has to be closed and dark so that outside light does not distract the eyes.

- The eye-lens-screen distance is very important and varies from user to user. I ended up building a couple of rails into my latest helmet so it could be adjusted.

- Breathing is an issue. There has to be an opening for the nose, or the screen and lenses will steam up within seconds.

- It has to be light, yet firm enough to avoid any kind of wobbling.

The first version was not the best, but after many attempts, lots of super-glue, cutting and broken elastic bands, I built a black helmet that follows all those guidelines.

The Communication:

Everything works together thanks to some UDP magic. The DataStreamer apps bundle the information into datagrams and send them to the server (the game). Once the game receives a datagram, it parses it and forwards the data to the relevant GameObjects, which apply it.
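As a minimal sketch of the sending side, assume a pose is sent as a quaternion of four floats (the project’s real packet layout may differ). Little-endian byte order is an assumption, chosen because it matches what C#’s `BitConverter.ToSingle` reads on little-endian machines such as typical ARM and x86 devices, which would keep the Unity-side parsing simple. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical packet format: a pose as four little-endian floats (x, y, z, w). */
public final class PosePacket {
    public static final int SIZE = 4 * Float.BYTES;

    private PosePacket() {}

    /** Packs a quaternion into a 16-byte payload. */
    public static byte[] encode(float x, float y, float z, float w) {
        return ByteBuffer.allocate(SIZE)
                .order(ByteOrder.LITTLE_ENDIAN)
                .putFloat(x).putFloat(y).putFloat(z).putFloat(w)
                .array();
    }

    /** Unpacks a payload produced by encode(); rejects datagrams of the wrong size. */
    public static float[] decode(byte[] data, int length) {
        if (length != SIZE) {
            throw new IllegalArgumentException("expected " + SIZE + " bytes, got " + length);
        }
        ByteBuffer buf = ByteBuffer.wrap(data, 0, length).order(ByteOrder.LITTLE_ENDIAN);
        return new float[] {buf.getFloat(), buf.getFloat(), buf.getFloat(), buf.getFloat()};
    }

    /** Fire-and-forget send: UDP gives no delivery guarantee and no retransmission. */
    public static void send(DatagramSocket socket, InetAddress game, int port, byte[] payload)
            throws IOException {
        socket.send(new DatagramPacket(payload, payload.length, game, port));
    }
}
```

For poses that are refreshed many times per second, losing the occasional datagram is harmless because a fresher one follows almost immediately, which is presumably why UDP suits this better than TCP and its retransmissions.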

The key to achieving RT here was to use a separate port for each kind of message (fire mode, hip pose, steps, gun pose, etc.). A file defines a set of port offsets, and each listener applies its offset when sending the datagram. On the server side there is one thread per port; each of these threads waits for exactly one type of message and processes it as soon as it arrives.
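The port-per-message design can be sketched in Java as follows. The message types mirror the list above, but the enum, its offsets, the base port and the handler interface are hypothetical stand-ins for the project’s own offsets file and Unity listeners. Each type gets its own socket on `basePort + offset` and its own blocking listener thread, so a burst of one message type never delays another.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.SocketException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.BiConsumer;

/** Hypothetical sketch: one UDP port and one listener thread per message type. */
public final class PortPerMessageServer {
    /** Shared by sender and receiver, like the project's offsets file (offsets assumed). */
    public enum MessageType {
        FIRE_MODE(0), HIP_POSE(1), STEPS(2), GUN_POSE(3);

        public final int offset;

        MessageType(int offset) {
            this.offset = offset;
        }

        public int port(int basePort) {
            return basePort + offset;
        }
    }

    private final List<DatagramSocket> sockets = new ArrayList<>();

    /** Binds one socket per message type and starts a daemon thread listening on each. */
    public void start(int basePort, BiConsumer<MessageType, byte[]> handler) throws SocketException {
        for (MessageType type : MessageType.values()) {
            DatagramSocket socket = new DatagramSocket(type.port(basePort));
            sockets.add(socket);
            Thread listener = new Thread(() -> listen(socket, type, handler), "udp-" + type);
            listener.setDaemon(true);
            listener.start();
        }
    }

    private static void listen(DatagramSocket socket, MessageType type,
                               BiConsumer<MessageType, byte[]> handler) {
        byte[] buffer = new byte[512];
        DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
        while (!socket.isClosed()) {
            try {
                packet.setLength(buffer.length); // reset before reusing the packet
                socket.receive(packet);          // blocks until this type's datagram arrives
                handler.accept(type, Arrays.copyOf(packet.getData(), packet.getLength()));
            } catch (IOException e) {
                // Typically thrown when stop() closes the socket; the loop condition then exits.
            }
        }
    }

    /** Closes every socket, which unblocks and ends the listener threads. */
    public void stop() {
        for (DatagramSocket socket : sockets) {
            socket.close();
        }
        sockets.clear();
    }
}
```

The handlers run on the listener threads. In Unity, the equivalent code would need to store the latest value and let each GameObject read it in its `Update()` method, since the Unity API should only be used from the main thread.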

What’s next:

There are a few bits I am still not happy with and will try to solve at some point.

- I would love to take advantage of today’s 3D printers to get a more professional helmet made. I am already talking to a few people and this might happen soon! If it does, I will post the model here.

- Gun drift. As I mentioned, after a few minutes the gun pose may drift a bit. If the tablet in the helmet had a camera, I could put a few LEDs on the gun’s muzzle, flickering in different patterns, and track them directly from the game. This would even allow the gun to move freely in 3D space (while in view). I still want the user to be able to fire backwards; in that case the normal gyroscope reading can be used, and when the gun comes back into the front view any drift can be corrected again.

- Jump! I have already done a few experiments, and it would be interesting to detect jumps using the pedometer code. So far, though, the results make me feel very dizzy after a couple of tries.

- Use a more professional gun, maybe by modifying an electronic airsoft gun, so the weight and controls feel more realistic.